While “data contracts” are having a bit of a moment, they’re nothing new. And an outsized focus on that specific interface, or any other, risks[1] putting the cart before the horse.

Data contracts are brilliant for the right use cases, but if over-used will slow your data discovery to the speed of bureaucracy.

If data contracts are but one of many tools, and your data stack is your tool belt, then what’s the skill of deciding the right tool for the right job?

Risk-aware data management[2].

Back to the future

To go further forward, we have to go way back—and a little bit out.

As the industrial revolution enabled a massive acceleration of technology and infrastructure, eager engineers built marvels unlike any that had been seen before. Unfortunately, safety standards lagged behind innovation. Infrastructure failed, leading to great loss of life and a more solemn understanding of the duty of an engineer. Those lessons continued throughout the 20th century, leading to the robust risk-management frameworks that the physical[3] engineering disciplines use today.

Notably, physical engineering projects have direct material, budgetary, and schedule constraints that don’t tolerate the idealism of “eliminate every risk”.

So, physical engineering projects have to manage risk in a way that balances safety, financial success, and resources.

For data practitioners who work in the physical engineering space, this way of thinking will be familiar. Some amazing data systems (like machine-learning-driven maintenance plans for aircraft) directly serve these risk management approaches.

But for data practitioners (and software engineers) on the more startuppy, techy side of things, these ideas of risk management can be more distant for a few reasons:

Entrepreneurs and those who join small startups inherently have a high risk tolerance

Tech startups rarely make decisions that can cause physical harm[4]

Virtual systems are infinitely more scalable than physical systems—the cost of a full backup database is much lower than the cost of a full backup factory

And while software engineers and IT professionals are having to develop more sophisticated skills around cyber-security risk, risk management isn’t a core teaching for those ramping up in the tech data space, but it should be[5].

Techy data risks

While the risks of physical harm, security vulnerabilities, and regulatory non-compliance tend to be more tangible, risk-aware thinking expands far beyond these areas.

Risk-aware thinking can help bring attention to murky ethical issues, and it adds value to mundane business-as-usual processes too.

Business-as-usual data for tech companies usually falls into one of two categories—operational or strategic. It’s helpful to understand the risks inherent in each.

Operational data risks

Operational data drives processes like emailing prospect lists, scoring leads, surfacing subscriptions that are likely to churn, etc. Sometimes, this data lives entirely in a customer relationship manager (CRM) or customer data platform (CDP), while other times it sprawls across systems and sources.

Operational data problems lead to shaky, ineffective operations. For example: emailing “new customer” discount codes to people who’ve already purchased, failing to provide warnings before shutting an account off due to missed payments, accidentally exposing sensitive information broadly, etc.

The material impact is usually felt as lost revenue, either directly (as through failure to remove features from a churned account) or indirectly (as through failing to acquire new customers due to poor reputation). Impacts can also be internal, such as sales reps quitting because they aren’t being paid correctly[6].

Strategic data risks

Strategic data is more often what people think of when conjuring the image of a “data practitioner”—metrics, dimensions, trends, and forecasts that (theoretically) improve the quality of business decisions.

Inaccurate data can lead to poor decisions that have material consequences. For example, the product team decides to deprecate a feature due to low usage, only to find out that usage events were severely under-counted due to a bug, and the feature was actually a fan favorite.

Reconciling risk tolerance

Startups move a mile a minute, driven by risk-tolerant execs with a “move fast and break things” approach. Tensions rise when risk-averse data professionals bring a “please stop breaking things” mindset, lamenting the irresponsibility of those risk-tolerant execs.

The path forward—the hockey-stick launching ramp into the future—isn’t risk-tolerance or risk-aversion: it’s risk awareness.

A strong risk-management approach brings the best of both worlds:

“Move fast and break (the right) things.”

Dimensions of risk management

Any given risk has two important traits when deciding how to manage it:

How likely is this risk to happen?

How bad will it be if it happens?

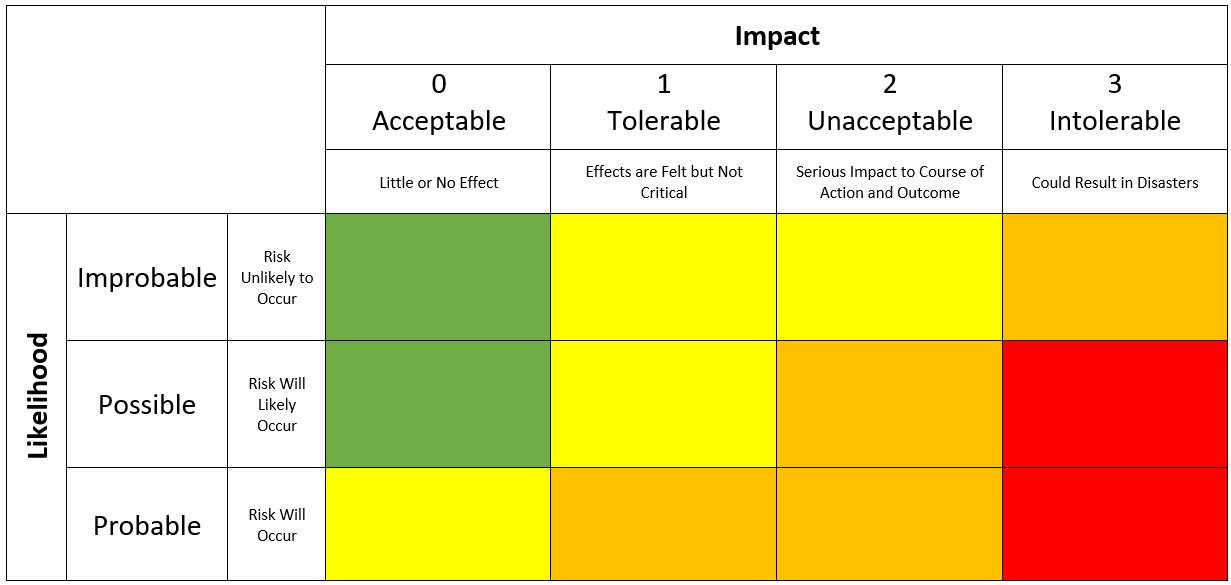

These two dimensions create a matrix. Here’s an example from TMS about project risk management:

Usually, the goal is to get each risk into the yellow or green categories. Red risks should be addressed first, followed by orange risks.

Without a framework like this, it’s easy to get caught up on likelihood or impact/severity alone, wasting time on frequent risks that have little impact or scary risks that will almost never happen.
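To make the two dimensions concrete, here’s a minimal Python sketch of a likelihood-by-impact matrix. The 1-to-5 scales, the score cutoffs for each color band, and the example risks are all assumptions invented for illustration; they are not taken from the TMS matrix, and a real team would draw the boundaries to suit its own tolerance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 = rare, 5 = almost certain
    impact: int      # assumed scale: 1 = negligible, 5 = severe

    @property
    def score(self) -> int:
        # Combine both dimensions so neither is judged alone.
        return self.likelihood * self.impact


def category(risk: Risk) -> str:
    """Map a risk's score to a color band (hypothetical cutoffs)."""
    if risk.score >= 15:
        return "red"
    if risk.score >= 8:
        return "orange"
    if risk.score >= 4:
        return "yellow"
    return "green"


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score, so the worst come first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Made-up example risks, loosely echoing the ones discussed in this post.
risks = [
    Risk("paper cut", likelihood=4, impact=1),
    Risk("usage events under-counted", likelihood=3, impact=4),
    Risk("sensitive data exposed", likelihood=2, impact=5),
    Risk("very rare catastrophe", likelihood=1, impact=5),
]

for r in prioritize(risks):
    print(f"{r.name}: score={r.score} -> {category(r)}")
```

With these made-up numbers, the frequent-but-trivial paper cut and the scary-but-rare catastrophe both land in a lower band than the two risks that are moderately likely and moderately painful, which is the point of looking at both dimensions at once.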

Paper cuts are painful, but it’s not worth wearing gloves all the time to 100% eliminate paper cut risk[7].

On the flip side, focusing all your attention on unlikely risks will turn you into Aunt Josephine.

This two-dimensional framework helps you identify which risks to prioritize.

Importantly, a risk can be mitigated in two different ways:

Decrease the impact when it happens

Decrease the likelihood of it happening

The goal is NOT to eliminate the risk—it’s to bring it to an appropriate level. This is where a lot of data practitioners get overwhelmed, focusing too much on eliminating a high-visibility risk and leaving other serious risks lurking in the shadows.

This can happen with an idea like data contracts—spending a lot of time and energy ensuring that all the data conforms to expectations, but neglecting the risks of slowing business velocity.

An example

When I first started working with the Solutions Partner Program at HubSpot (it was the Agency Partner Program at the time), we had a good process for the legal and financial side of merging two partner accounts, but we didn’t have a consistent way of reflecting a merger in our operational system. That led to some stickiness in the partner experience: old accounts stayed open, which could be confusing for both partners and our internal reps. The impact on customer experience was usually mild to moderate.

Initially, this risk was acceptable because the frequency was low. But as HubSpot scaled, the frequency increased, bringing this risk up to an unacceptable level.

So, it was necessary to bring the risk posed by mergers back down. This is where eliminating the risk is tempting: we could have taken on a large project to build perfect automation to elegantly represent mergers. But using resources there would take them away from somewhere else. Because I was thinking in a risk-based way, it was clear that we didn’t need to eliminate this risk, just reduce it to an acceptable level.

We didn’t have much ability to change the impacts of old accounts staying open, but we did have an opportunity to decrease the likelihood of a merger occurring without consolidating accounts. We already had a manual review process for the legal side, so we could add to that manual process with a high rate of compliance—as long as the added steps were quick and clear. I created some data processing structures and documentation to semi-automate the process, ensuring that we’d have a high rate of completion for the additional manual steps.

We were able to knock out over 95% of the risk of old accounts remaining open with just a small fraction of the effort that would have been required to 100% eliminate the risk. Customer satisfaction went up and business velocity stayed high.

More on this in practice

Finance data, especially that reported to the street, has serious consequences if inaccurate. Reducing the frequency of inaccurate data and having a clear and appropriate correction process for when errors do occur are both effective mitigation strategies.

Conversely, product usage data need only be directionally correct to provide value. Being 98% correct is perfectly acceptable here in a way that it isn’t for finance.

So when you have data pipelines failing due to upstream changes, it’s important to ask two questions for each source:

How often do we expect these failures to occur?

What’s the bottom-line impact when these failures occur?

Let’s say we expect a source to fail once a year when the product team releases major revisions that rename things. It takes about a week of scrambling to fix this, delaying the quarterly readout, but not significantly impacting decision quality.

We’ve also estimated that it will take about four weeks of back-and-forth to settle on a “data contract” (i.e. API interface with SLAs) for this data ingestion.

Our break-even on this time investment is four years. Will we even need this data for that long? Are there other areas that would benefit from those extra three weeks of time this year?[8]

In this specific case, a “data contract” probably isn’t worth it.
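The arithmetic behind that call can be sketched in a few lines of Python. The inputs come from the example above (one failure a year, about a week lost per failure, about four weeks to negotiate the contract); the function and variable names are hypothetical, and the sketch assumes the contract would prevent every such failure, which is the optimistic case.

```python
def break_even_years(upfront_weeks: float, weeks_saved_per_year: float) -> float:
    """Years until an up-front time investment pays for itself."""
    if weeks_saved_per_year <= 0:
        raise ValueError("the investment never pays off if it saves no time")
    return upfront_weeks / weeks_saved_per_year


# Figures from the example in the text.
contract_cost_weeks = 4      # back-and-forth to settle the data contract
failures_per_year = 1        # major product revision that renames things
weeks_lost_per_failure = 1   # scramble to fix the pipeline

# Assumption: the contract prevents all of these failures.
weeks_saved_per_year = failures_per_year * weeks_lost_per_failure

years = break_even_years(contract_cost_weeks, weeks_saved_per_year)
extra_weeks_this_year = contract_cost_weeks - weeks_saved_per_year

print(f"Break-even after {years:g} years")
print(f"Extra weeks spent in year one: {extra_weeks_this_year}")
```

Plugging in different estimates for another source is a quick way to see which side of the trade-off it falls on before anyone schedules a negotiation.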

The inverse can easily be true—two weeks spent on a “data contract” saving a quarter’s worth of thrash and scramble.

It’s important to have a sense, for the particular use case at hand, of which way that opportunity cost is going to fall.

“What is the risk level of this data?” is as important a question as “how do we treat high-risk data?”

The language of risk management

All trade-offs, whether in budget, warehouse space, or person-hours, are ultimately questions of opportunity cost.

I see most tech people frame these trade-offs as value-add (e.g. return on investment), but this can only provide limited clarity on what to do next. What do you do if you have too many good ideas—too many things that provide high ROI?

Risk-based thinking asks the same questions—but inside out. Not “what good thing will happen if we do this” but “what bad thing will happen if we don’t?”

Making money is good. Not making money that we could have made is bad.

Making customers happy is good. Losing customers that we didn’t make happy enough is bad.

Here’s where we can start to tease out the unique benefit of risk-based thinking. Making happy customers happier may provide less value than making disgruntled customers ambivalent.

Oftentimes, it’s easier to identify and prioritize what we don’t want than what we do want. Of course we want to please all of our customers, but it’s much easier to deal with sassy responses in feedback forms from loyal customers than to suffer massive churn from on-the-fence customers.

Turning your happy customers into fanatic promoters is definitely an upside, but it’s wise to ensure your ship isn’t sinking before throwing a party on deck.

Conclusion

No matter how startup funding, cheap virtual storage, and hustle culture blur the lines, all companies have finite resources.

Risk management is more important than ever as VC funding slows, compute costs mount, and humans resist the detriments of ever-expanding work weeks[9].

Remember, using a risk management framework doesn’t make you risk-averse—it makes you risk-aware. It gives you special leverage in how you and your team spend your time, energy, and other resources.

Focusing only on upside can leave prioritization murky and serious problems lurking. Focusing on risks captures both upside and downside, leading to maximum leverage overall[10].

There are few universally best practices. Most things, whether data contracts, documentation, version control, or anything else, are brilliant in some contexts and overkill in others.

We may lament stubbing a toe, but wearing shoes 100% of the time would be worse than the occasional pain. Conversely, the cost of crashing your bicycle is so great that it’s worth the mild discomfort of a helmet, even with a very low crash rate.

Risk-based thinking helps answer so many of the questions that plague data practitioners: What project should we work on next? How statistically significant does this analysis need to be? How much time should I spend tracking down why 2% of this column is null? Do I need a data contract for this source, and what should my expectations be? Should we use data for this decision at all, or rely on other inputs? When should I create a standards document? How detailed should it be? Should I use a feature branch flow?

It’s ~~turtles~~[11] risk-aware thinking all the way down.

So, fellow data practitioners—what do you think? Should risk-aware data management be the next (old) big thing?

[1] See what I did there?

[2] Lest you think I’ve coined this phrase, here’s a Metagenomics paper from 2013

[3] mechanical, electrical, civil, industrial, chemical engineering, and so on

[4] Ethical harm is another matter, and we’ll get to that soon. These traits of tech startups illustrate how high risk tolerance plus low appearance of harm can lead to incredibly risky, haphazard, and ultimately harmful decisions.

[7] unless you’re a hand model

[8] You’ve heard about the time value of money, but stay tuned for my future post on the time value of time

[9] Not to mention the mass-disabling event that is Long COVID

[10] Remember, this isn’t about eliminating all risks. To keep the startuppy folks on your side, you have to be strategic about which risks to mitigate and which to live with.

💜 this. One thing I would say is that the data contracts investment is much higher when you have the back and forth negotiation.

With the producer only contract you can get a lot of the benefit with very little investment. I feel like it's hard to argue against doing at least that with any useful data source.

What a read ! Thanks Ashely for this one. Looking forward to reading more on time value of time !